ChatGPT Adds Lockdown Mode for Protection Against Prompt Injection Attacks

ChatGPT has added Lockdown Mode, "an optional, advanced security setting designed for a small set of highly security-conscious users—such as executives or security teams at prominent organizations—who require increased protection against advanced threats."

Specifically, the mode targets prompt injection attacks. Because LLMs can't distinguish between the developer's instructions and user input, a malicious actor can trick the LLM to ignore the developer's intentions and instead follow their new instructions.

These attacks can be incredibly destructive when combined with AI agents that can perform actions on behalf of their users such as managing files, sending emails, or browsing the web.

Trail of Bits demonstrated how vulnerable agentic browsers are to these types of attacks in a blog post a bit ago. They were able to exfiltrate data through some creative means.

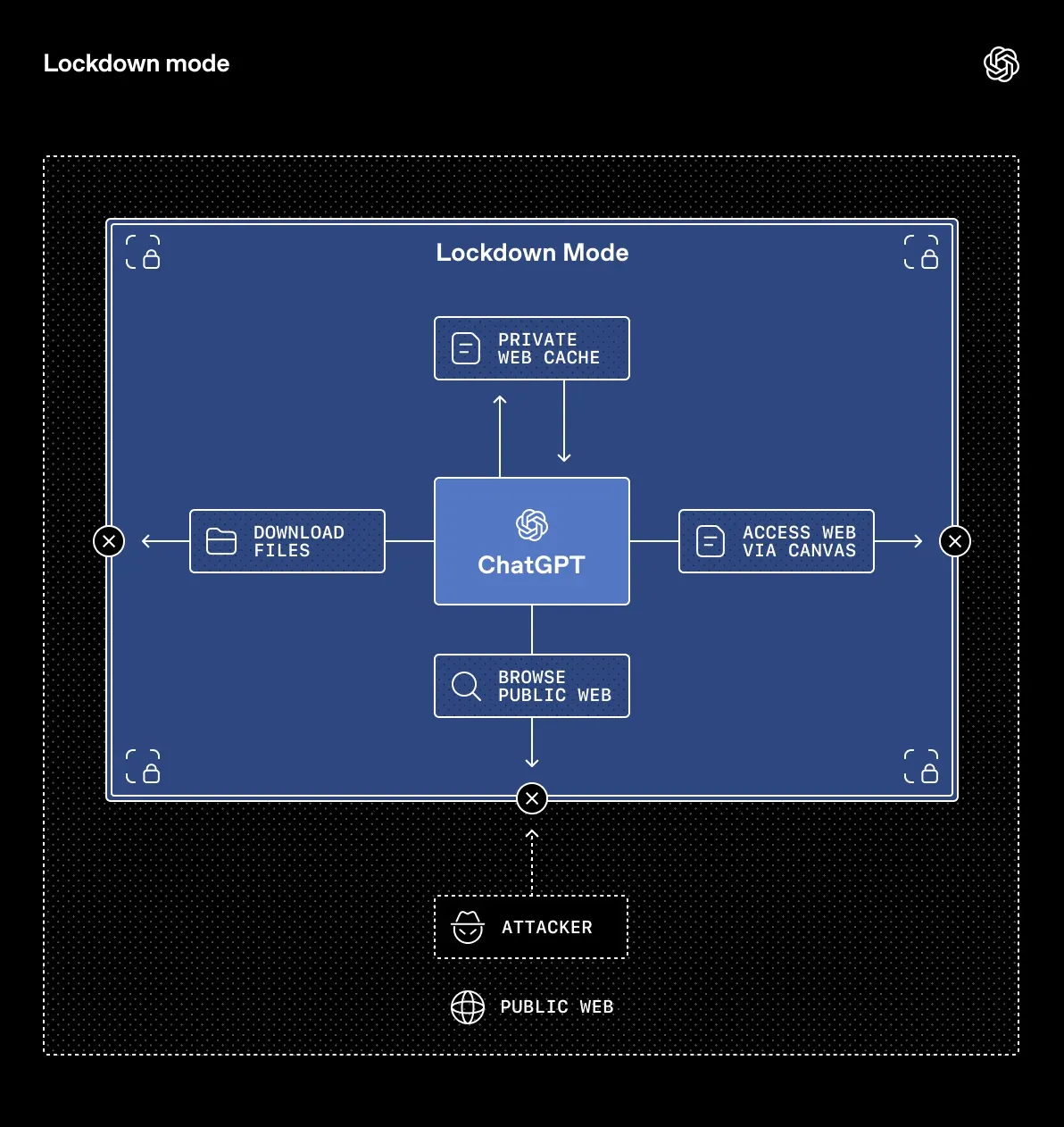

The mode works by disabling certain tools and features in ChatGPT that an attacker could use to exfiltrate data through a prompt injection attack.

Namely, Lockdown Mode limits ChatGPT's ability to access the live web and is instead restricted to cached versions. Image support is disabled for responses as well although users can still upload their own images and use the image generation feature. Deep research, an agentic feature for analyzing data from trusted sources, Agent Mode, which lets ChatGPT browse the web and perform actions for you, Canvas's ability to access the network, and file downloads are all disabled in Lockdown Mode.

Configuring these features in this way is designed to prevent them from being used for the final stage of prompt-injection driven data exfiltration attacks by deterministically preventing outbound network requests that could be sent to the attacker to transfer such data. Note that Lockdown Mode does not deterministically prevent prompt injections from reaching the context in the first place (e.g. a prompt injection could be in cached content accessed via web browsing), instead it is designed to prevent network requests that could be used to transfer sensitive data to an attacker.

Since apps can have network access and therefore exfiltrate data, they pose a big security risk. But, many apps are essential for certain workflows, so domain admins are given granular controls over which apps and which capabilities within those apps to enable.

For now, Lockdown Mode is limited to ChatGPT Enterprise, Edu, ChatGPT for Healthcare, and ChatGPT for Teachers. OpenAI says they plan to make it available to ChatGPT consumer and team plans in the coming months.

OpenAI says Lockdown Mode doesn't completely eliminate the risk of prompt injection attacks, only greatly reduces it.

In addition, new "Elevated Risk" labels have been added to features that OpenAI deems a security risk. They say once enough security mitigations are in place, the labels will eventually be removed.

These additions build on our existing protections across the model, product, and system levels. This includes sandboxing, protections against URL-based data exfiltration, monitoring and enforcement, and enterprise controls like role-based access and audit logs.

OpenAI had previously promised to buff up the security of ChatGPT and include client-side encryption to keep your chats safe:

Our long-term roadmap includes advanced security features designed to keep your data private, including client-side encryption for your messages with ChatGPT. We believe these features will help keep your private conversations private and inaccessible to anyone else, even OpenAI.

We're still waiting on that encryption for now.

Community Discussion